Model Parallelism

This section was presented as slides at the workshop; this text version includes some additional notes. You can also access the slide version here.

Overview

- Parallelization schemes that are useful for LLMs;

- Common tools for running LLMs in parallel.

Strategies

Data parallelism (DP)

Image source: ultrascale playbook

- Trivial to implement;

- Syncing overhead for training;

- Memory cost due to replication.
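To make the replication and syncing cost concrete, here is a minimal, framework-free sketch of one data-parallel step. The toy model (`y = w * x` with squared error), the helper names `local_gradient` and `all_reduce_mean`, and the data are illustrative assumptions; real frameworks do the averaging with a collective all-reduce across GPUs.

```python
# Toy data-parallel step: every "worker" holds a full copy of the weight w,
# computes a gradient on its own shard of the batch, and the gradients are
# then averaged (the job an all-reduce does across real devices).

def local_gradient(w, shard):
    """Mean gradient of the squared error (w*x - y)**2 over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the mean."""
    return sum(values) / len(values)

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5
num_workers = 2

# Split the batch into equally sized shards, one per worker.
shard_size = len(batch) // num_workers
shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]

grads = [local_gradient(w, shard) for shard in shards]  # parallel in practice
synced_grad = all_reduce_mean(grads)

# With equal shard sizes, the averaged gradient equals the full-batch gradient.
full_grad = local_gradient(w, batch)
```

Note that each worker stores the whole model (here just `w`), which is the memory cost of replication, and that `all_reduce_mean` has to run on every training step, which is the syncing overhead.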

Overlapping in DP

Image source: ultrascale playbook

- Overlapping reduces communication overhead.
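The effect of overlapping can be illustrated with a toy timing model. The sketch below assumes that gradients become available layer by layer during the backward pass and that only one all-reduce runs at a time; the function names and the time units are hypothetical.

```python
# Toy timing model for one backward pass over several layers (arbitrary units).
# backward[i] is the time to compute layer i's gradients, comm[i] the time to
# all-reduce them. Gradients become available in the order listed.

def time_without_overlap(backward, comm):
    """All communication starts only after the whole backward pass."""
    return sum(backward) + sum(comm)

def time_with_overlap(backward, comm):
    """Each layer's all-reduce starts as soon as its gradient is ready and
    the network is free, while later layers keep computing."""
    compute_done = 0.0
    comm_done = 0.0
    for b, c in zip(backward, comm):
        compute_done += b
        comm_done = max(comm_done, compute_done) + c
    return comm_done

backward = [3.0, 3.0, 3.0, 3.0]
comm = [2.0, 2.0, 2.0, 2.0]
sequential = time_without_overlap(backward, comm)
overlapped = time_with_overlap(backward, comm)
```

In this example only the last all-reduce is exposed; the others are hidden behind the computation of later layers.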

Sharding in DP

Image source: ultrascale playbook

- Sharding reduces memory usage;

- Three levels of sharding (optimizer states, gradients, weights);

- ZeRO methods / FSDP2.
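The three sharding levels correspond to ZeRO stages 1 to 3. A rough per-GPU memory estimate can be sketched as follows, assuming mixed-precision Adam training (2 bytes per parameter for weights, 2 for gradients, 12 for optimizer states) and ignoring activations and temporary buffers. The function name and the 7B-parameter example are illustrative.

```python
# Per-GPU memory (in bytes) for model states under the three sharding levels.
# Assumption: mixed-precision Adam with 2 bytes/param for weights, 2 for
# gradients and 12 for optimizer states (fp32 weight copy, momentum, variance).
# Activations and temporary buffers are ignored.

WEIGHT_BYTES, GRAD_BYTES, OPTIM_BYTES = 2, 2, 12

def model_state_bytes(num_params, num_gpus, stage):
    """stage 0: plain DP; 1: shard optimizer states; 2: + gradients; 3: + weights."""
    weights = WEIGHT_BYTES * num_params
    grads = GRAD_BYTES * num_params
    optim = OPTIM_BYTES * num_params
    if stage >= 1:
        optim /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        weights /= num_gpus
    return weights + grads + optim

params = 7_000_000_000  # hypothetical 7B-parameter model
gpus = 8
for stage in range(4):
    gib = model_state_bytes(params, gpus, stage) / 2**30
    print(f"stage {stage}: {gib:.1f} GiB per GPU")
```

Under these assumptions the optimizer states dominate, which is why sharding them alone already gives a large saving.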

Expert (MoE) parallelism

Image source: ultrascale playbook

- Only a subset of experts is activated per token;

- Similar to sharding, but reduces the need for data exchange.

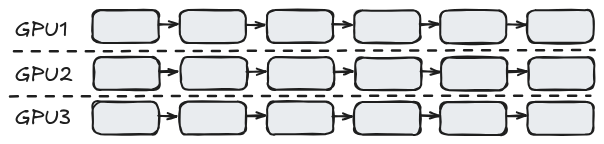
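As a rough illustration of per-token expert activation, the sketch below routes each token to its top-k experts. The router scores are made up, and the helper names `top_k_experts` and `route` are hypothetical; real MoE layers compute the scores with a learned gating network.

```python
# Toy top-k routing: each token is sent only to its k highest-scoring experts.

def top_k_experts(scores, k):
    """Indices of the k largest router scores, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

def route(router_scores, k):
    """Map expert index -> list of token indices assigned to that expert."""
    num_experts = len(router_scores[0])
    assignment = {e: [] for e in range(num_experts)}
    for token, scores in enumerate(router_scores):
        for expert in top_k_experts(scores, k):
            assignment[expert].append(token)
    return assignment

# Hypothetical router scores: 4 tokens x 4 experts.
router_scores = [
    [0.1, 0.7, 0.1, 0.1],
    [0.6, 0.2, 0.1, 0.1],
    [0.1, 0.1, 0.2, 0.6],
    [0.2, 0.5, 0.2, 0.1],
]
assignment = route(router_scores, k=1)
# With one expert per GPU, each GPU only processes the tokens routed to it.
```

Here expert 1 receives two tokens and expert 2 receives none, which hints at the load-balancing issue that real routers have to handle.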

Tensor parallelism

Image source: ultrascale playbook

- Row-wise vs. column-wise versions;

- Needs to be carefully designed.

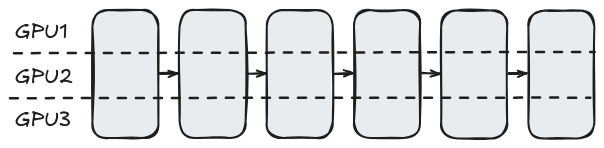
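The difference between the column and row versions can be checked on a small matrix product. This is a dependency-free sketch with plain Python lists; the helper names and the example matrices are illustrative assumptions, and the "devices" are simply list entries.

```python
# Pure-Python matrices (lists of rows) to keep the sketch dependency-free.

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def split_columns(m, parts):
    width = len(m[0]) // parts
    return [[row[p * width:(p + 1) * width] for row in m] for p in range(parts)]

def split_rows(m, parts):
    height = len(m) // parts
    return [m[p * height:(p + 1) * height] for p in range(parts)]

X = [[1, 2, 3, 4],
     [5, 6, 7, 8]]                      # activations: 2 tokens x 4 features
W = [[1, 0], [0, 1], [1, 1], [2, 0]]    # weights: 4 x 2

# Column-parallel: each device holds some columns of W and the full X;
# the partial outputs are concatenated (all-gather).
col_parts = [matmul(X, w) for w in split_columns(W, 2)]
y_col = [sum((part[i] for part in col_parts), []) for i in range(len(X))]

# Row-parallel: each device holds some rows of W and the matching columns of X;
# the partial outputs are summed element-wise (all-reduce).
row_parts = [matmul(x, w) for x, w in zip(split_columns(X, 2), split_rows(W, 2))]
y_row = [[sum(vals) for vals in zip(*rows)] for rows in zip(*row_parts)]

reference = matmul(X, W)
```

Both variants reproduce `reference`, but they need different collectives and different input layouts, which is why the split of consecutive layers has to be designed together.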

Tensor parallelism (cont.)

Image source: ultrascale playbook

- Expensive communication;

- Efficiency drops with inter-node parallelization.

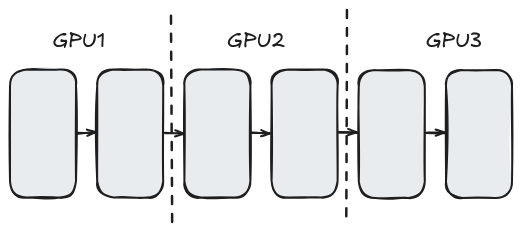

Pipeline parallelism (PP)

Image source: ultrascale playbook

- Training: the pipeline bubble problem (devices idle while waiting for other stages);

- Can be mitigated by using smaller micro-batches (and accumulating the gradients).
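The size of the bubble can be estimated with a short calculation. The sketch below assumes a simple all-forward-all-backward schedule in which every stage takes the same time per micro-batch; the function name is hypothetical.

```python
def bubble_fraction(num_stages, num_microbatches):
    """Idle fraction of an all-forward-all-backward pipeline schedule,
    assuming every stage takes the same time per micro-batch."""
    p, m = num_stages, num_microbatches
    return (p - 1) / (m + p - 1)

for m in (1, 4, 16, 64):
    print(f"4 stages, {m:>2} micro-batches: {bubble_fraction(4, m):.0%} idle")
```

Splitting the batch into more micro-batches shrinks the idle fraction, at the cost of keeping more activations in memory until the backward pass.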

Pipeline parallelism (cont.)

Image source: ultrascale playbook

- More scheduling strategies exist;

- They trade off load balancing, bubble size, memory and communication;

- Implementation is not trivial.

Hybrid / 3D parallelism

Image source: ultrascale playbook

- For really large models, one needs to combine the techniques.
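When the techniques are combined, the total number of GPUs is the product of the DP, PP and TP group sizes, and each global rank gets one coordinate per dimension. The sketch below assumes a layout in which the TP index varies fastest; this ordering, the function name and the group sizes are illustrative choices, not a fixed convention.

```python
def rank_to_coords(rank, tp_size, pp_size, dp_size):
    """Map a global rank to (dp, pp, tp) coordinates.
    Assumed layout: tp varies fastest, then pp, then dp."""
    assert 0 <= rank < tp_size * pp_size * dp_size
    tp = rank % tp_size
    pp = (rank // tp_size) % pp_size
    dp = rank // (tp_size * pp_size)
    return dp, pp, tp

# Hypothetical job: 16 GPUs = 2 (DP) x 2 (PP) x 4 (TP).
tp_size, pp_size, dp_size = 4, 2, 2
world_size = tp_size * pp_size * dp_size
layout = {rank: rank_to_coords(rank, tp_size, pp_size, dp_size)
          for rank in range(world_size)}
```

With 4 GPUs per node, this layout places each TP group (for example ranks 0 to 3) on a single node, so the communication-heavy tensor parallelism stays on the fast intra-node links.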

Implementations

Distributed computing - MPI

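    # just a simple script (train.py)
    import horovod.tensorflow.keras as hvd  # assumption: Horovod's Keras API
    ...
    hvd.init()  # initialize Horovod (one process per rank)
    model = Model(...)
    optimizer = hvd.DistributedOptimizer(...)  # wraps the base optimizer
    model.compile( ... )
    model.fit( ... )
    ...

    # and run in a job script
    srun python train.py

- General-purpose HPC communication;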

- Integrates well with the cluster;

- Not so popular in AI/ML.

Distributed computing - Ray

    import ray

    ray.init()

    @ray.remote
    def preprocess(data):
        ...

    @ray.remote
    def train(model_name, dataset):
        ...

    # each .remote() call returns a future immediately
    cleaned = [preprocess.remote(...) for data in dataset]
    trained_models = [train.remote(...) for data in cleaned]
    # block until all tasks have finished
    results = ray.get(trained_models)

- Python-native distributed orchestration;

Distributed computing - Ray (cont.)

    # start ray head
    srun -J "head ray node-step-%J" \
        apptainer exec ${SIF_IMAGE} ${RAY_CMD_HEAD} &
    RAY_HEAD_PID=$!

    # start ray worker
    srun -J "worker ray node-step-%J" \
        apptainer exec ${SIF_IMAGE} ${RAY_CMD_WORKER} &
    sleep 10

    # start the actual script
    apptainer exec ${SIF_IMAGE} vllm serve ${HF_MODEL} \
        --port ${API_PORT} ${vllm_opts}

- Run in server/client mode;

- Needs more work to configure.

Distributed computing - torchrun (Elastic Launch)

    export GPUS_PER_NODE=4
    export MASTER_ADDR=${PROEPI_HEAD_NODE}
    export MASTER_PORT=${PROEPI_FREE_PORT}

    srun python -u -m torch.distributed.run \
        --nproc_per_node $GPUS_PER_NODE --nnodes $SLURM_NNODES --node_rank $SLURM_PROCID \
        --rdzv_id=$SLURM_JOB_ID --rdzv_backend=c10d --rdzv_endpoint=$MASTER_ADDR:$MASTER_PORT \
        $ARGS

Popular training frameworks

- PyTorch DDP: standard (basic) DP in PyTorch;

- PyTorch FSDP: improved with sharding;

- DeepSpeed: implements advanced schemes (mostly sharding);

- Megatron-LM: NVIDIA’s implementation (tensor parallelism);

- Other options: NeMo, Colossal-AI, FairScale, …

Popular inference frameworks

- vLLM: PagedAttention and dynamic batching;

- DeepSpeed: fused attention;

- Triton Inference Server: NVIDIA’s inference platform.

Summary

Take-home message

- Enough memory: use data parallelism;

- On a single node: prefer tensor parallelism;

- On many nodes: use pipeline parallelism;

- For training: consult the ultrascale playbook;

- For inference: use an inference engine.